It is about time I wrote something about my recent work (post 1, post 2) with OpenCV and classic film sequences. I started the current version of the project first as a submission to the Image Conference in Berlin and then for the Microworld exhibition in Hong Kong. The software I have been testing includes the OpenCV library, openFrameworks and Processing. For the final show, I may choose openFrameworks for performance and stability reasons.

The two functions I explored in the OpenCV library are Motion Template and Optical Flow. Both belong to the Motion Analysis and Object Tracking module of the current OpenCV distribution. The Motion Template function maintains a motion history over consecutive frames and identifies the magnitude and direction of the major movements within the camera frame. I used it in my last project, Movement in Void – A Tribute to TV-Buddha, to track the swinging direction of a hanging ball equipped with a downward-pointing webcam.

The interface screen actually shows the motion direction and magnitude with a circle and a pointer.
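To make the motion history idea more concrete, here is a minimal sketch in plain Python. It is not the OpenCV implementation; the function name `update_motion_history`, the nested-list image format and the `duration` parameter are my own assumptions for illustration. Pixels that have just moved are stamped with the current time, and stamps older than `duration` are cleared, so the remaining values rise in the direction of travel.

```python
def update_motion_history(mhi, silhouette, timestamp, duration):
    """Update a motion history image (MHI) in place and return it.

    mhi        -- 2D list of floats; 0.0 means "no recent motion"
    silhouette -- 2D list of 0/1 flags marking pixels that moved in this frame
    timestamp  -- current time (assumed to start above 0)
    duration   -- how long a motion trace is remembered
    """
    for y, row in enumerate(silhouette):
        for x, moving in enumerate(row):
            if moving:
                # Fresh motion: stamp the pixel with the current time.
                mhi[y][x] = timestamp
            elif mhi[y][x] < timestamp - duration:
                # The trace is too old: forget it.
                mhi[y][x] = 0.0
    return mhi
```

If a blob moves from left to right, the stamps in the history image increase from left to right, and the gradient of those values gives the direction of motion.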

The optical flow function samples a few pixels from one video frame and then traces their new positions in the next frame. In the end, it generates a map of vectors (like tiny pointers) indicating the directions in which the pixels flow. I started playing around with optical flow while developing and teaching a very old course, Image Processing and Augmented Reality Applications, back in the School of Creative Media, City University of Hong Kong. The final deliverable was to create a multi-touch interface by just waving your hands in front of a webcam.

The algorithm was very crude at that time. It used a brute-force method to enumerate the colour information of all pixels in a predefined neighbourhood and looked for the closest match. Before coming up with the final version, I had great fun using the optical flow information for creative applications.
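The brute-force search described above can be sketched roughly as follows. This is not the original course code; it is a simplified illustration that assumes greyscale frames stored as nested lists, and the names `block_sad` and `match_block`, the block size and the search radius are all hypothetical. For a small block in the previous frame, it tries every offset within the radius and keeps the one with the smallest sum of absolute differences.

```python
def block_sad(prev, curr, x, y, dx, dy, block):
    """Sum of absolute differences between the block at (x, y) in prev
    and the block shifted by (dx, dy) in curr."""
    total = 0
    for j in range(block):
        for i in range(block):
            total += abs(prev[y + j][x + i] - curr[y + dy + j][x + dx + i])
    return total


def match_block(prev, curr, x, y, block=2, radius=2):
    """Return the (dx, dy) offset within `radius` that best matches the block.

    Offsets that would push the block outside the frame are skipped.
    On ties, the first offset found in scan order wins.
    """
    height, width = len(curr), len(curr[0])
    best_cost, best_vec = None, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            nx, ny = x + dx, y + dy
            if nx < 0 or ny < 0 or nx + block > width or ny + block > height:
                continue
            cost = block_sad(prev, curr, x, y, dx, dy, block)
            if best_cost is None or cost < best_cost:
                best_cost, best_vec = cost, (dx, dy)
    return best_vec
```

Calling `match_block` on a grid of sample points yields the map of tiny pointers mentioned earlier. The cost grows with the square of the search radius, which is why this approach is crude compared with the optical flow routines in OpenCV.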

This is one of the directions I have investigated and experimented with. Rather than writing another Photoshop filter, I try to see whether I can take existing images, from still photos, digital videos, or live camera feeds, and render them for creative outputs. In my studies at RMIT, there is a side dish of using image processing techniques for creative rendering.

In most of my artworks, I look for raw data as inputs and then transform them into perceivable forms, such as physical objects or imagery. Digital images are one of the sources. Computer software does not understand the image content. It can, however, identify groupings of colours, sudden changes in colours, and distributions of colours within a single image frame. For example, face detection is a computer vision application that identifies potential human faces in an image; I used it in the artwork I Second that e-Motion.

For movement information, we need to compare one frame with others. To identify the presence or absence of a foreground figure, we compare the current frame against the background image. To identify movement, we compare the current frame against the previous frame. Motion Template and Optical Flow are ways to identify and understand movement. The movement information we obtain can be used for interaction design or creative visualizations.
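Both comparisons come down to the same per-pixel operation with a different reference image. Here is a minimal sketch, assuming greyscale frames as nested lists; the names `diff_mask` and `motion_amount` and the threshold value are hypothetical choices for illustration only.

```python
def diff_mask(frame, reference, threshold=30):
    """Mark pixels whose brightness differs from the reference by more
    than `threshold`. Use the background image as the reference to detect
    presence, or the previous frame to detect movement."""
    return [
        [1 if abs(a - b) > threshold else 0 for a, b in zip(row_f, row_r)]
        for row_f, row_r in zip(frame, reference)
    ]


def motion_amount(mask):
    """Fraction of pixels flagged in the mask, between 0.0 and 1.0."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total
```

Compared against the background, the mask shows where the figure is now. Compared against the previous frame, it shows both where the figure was and where it has moved to, which is the kind of silhouette a motion history can be built from.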

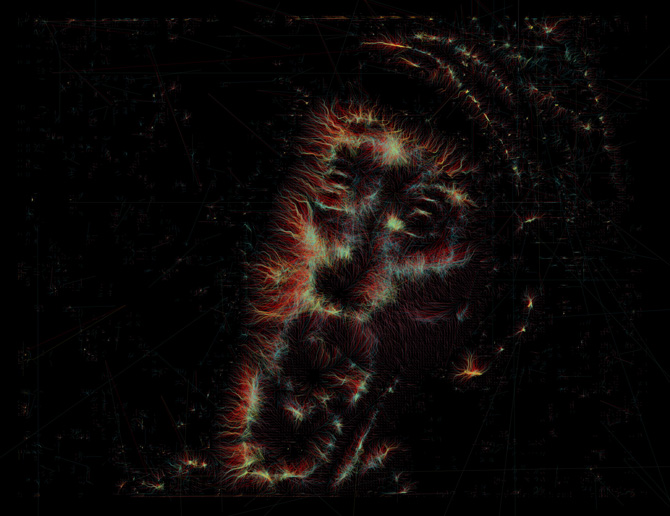

Hector Rodriguez, a former colleague at the School of Creative Media, has also worked on optical flow to produce a number of innovative artworks, ranging from simpler renderings of classic films in Flowpoints to the sophisticated categorization and comparison of movement information from film clips maintained in a database, as in the Gestus Project.

Gestus, Hector Rodriguez

Benjamin Grosser created the project Computers Watching Movies. Even though the artist does not disclose much about the custom computer vision software, I believe there is a motion analysis component in it.

Frederic Brodbeck’s Cinemetrics aims to create a unique fingerprint for each classic film. By taking motion, speech, colours, and editing into consideration, he generates an infographic visualization for each individual film. He has also released his Python code on GitHub.

There are a number of colour analyses of Zhang Yimou’s Hero, perhaps because of its deliberate colour narrative.

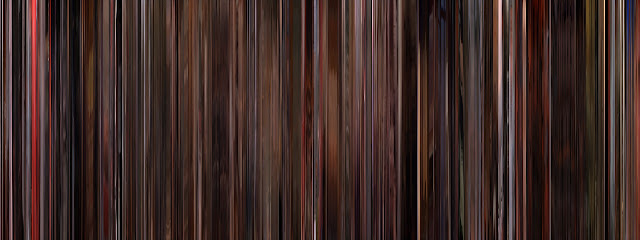

Another example is the MovieBarCode project. Taking an approach similar to the slit-scan effect, it compresses the temporal sequence of a whole film into a rectangle. Each frame becomes a strip a single pixel wide.
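The compression step can be sketched in a few lines. I do not know how the MovieBarCode project actually resamples its frames, so the version below is only an assumption: it averages each row of a frame into one pixel to get a one-pixel-wide column, then places the columns side by side. Frames are nested lists of `(r, g, b)` tuples, and the names `squeeze_frame` and `movie_barcode` are hypothetical.

```python
def squeeze_frame(frame):
    """Collapse a frame into a one-pixel-wide column by averaging the
    colours of each row (integer division, so values stay in 0..255)."""
    column = []
    for row in frame:
        n = len(row)
        column.append(tuple(sum(px[c] for px in row) // n for c in range(3)))
    return column


def movie_barcode(frames):
    """Place the squeezed columns side by side, one per frame.

    Returns an image as a list of rows; its width equals the number of
    frames and its height equals the frame height.
    """
    columns = [squeeze_frame(f) for f in frames]
    height = len(columns[0])
    return [[col[y] for col in columns] for y in range(height)]
```

For a whole film, one would typically sample only every n-th frame so that the barcode stays at a manageable width.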

Here are the examples from Kieslowski’s Three Colours films.

For my own creation, I am trying to enhance the software to recognize different forms of movement within the film sequences, e.g. characters’ motion, camera pan, tilt, dolly, editing cuts, etc. The visualization will mostly be a particle system that responds organically to the movement data and flows continuously from one film to another. I do not intend to select the films myself but will rely on popular votes for classic film sequences on Internet websites. Currently, I am using the IGN database.

Stay tuned!